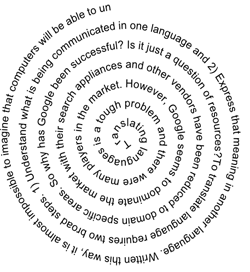

Translating between languages is a hard problem, and there have been many players in the market. However, Google seems to dominate it with its search appliances, while other vendors have been pushed into domain-specific niches. So why has Google been so successful? Is it just a question of resources?

Translation requires two broad steps: 1) understand what is being communicated in one language, and 2) express that meaning in another language. Put this way, it is almost impossible to imagine computers understanding meaning and then communicating it. To make the problem tractable, machine translation has instead proceeded along simpler lines.

Word substitution: Given a bilingual dictionary, each word can be replaced with its counterpart in the other language. However, much of the meaning is often lost, so this does not work very well.
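A minimal sketch of word substitution, assuming a tiny hypothetical English-to-Spanish dictionary (`DICTIONARY` and `substitute` are illustrative names, not from any library or product). It also shows the weakness described above: each word is translated, but the word order is not, so it produces "el negro gato" where Spanish puts the adjective after the noun ("el gato negro").

```python
# Hypothetical toy English-to-Spanish dictionary; real systems use far larger lexicons.
DICTIONARY = {
    "the": "el",
    "black": "negro",
    "cat": "gato",
    "sleeps": "duerme",
}


def substitute(sentence: str) -> str:
    """Replace each word with its dictionary entry; unknown words pass through unchanged."""
    words = sentence.lower().split()
    return " ".join(DICTIONARY.get(w, w) for w in words)


print(substitute("The black cat sleeps"))  # el negro gato duerme
```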

Grammar and word substitution: Here, grammar rules guide the substitution, for example by reordering words to suit the target language. This is an improvement over plain word substitution, but if the nouns are not explicitly marked, the rules may not apply correctly.
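A rough sketch of rule-guided substitution, assuming a hypothetical lexicon that tags each word with its part of speech and a single illustrative rule (English adjective + noun becomes Spanish noun + adjective). `LEXICON` and `translate_with_rules` are made-up names. The second call shows the failure mode mentioned above: "dog" is not in the lexicon, so it is never marked as a noun and the reordering rule does not fire.

```python
# Hypothetical tagged lexicon: English word -> (Spanish translation, part of speech).
LEXICON = {
    "the": ("el", "DET"),
    "black": ("negro", "ADJ"),
    "cat": ("gato", "NOUN"),
    "sleeps": ("duerme", "VERB"),
}


def translate_with_rules(sentence: str) -> str:
    # Tag each word; unknown words keep their spelling and get the tag "UNK".
    tagged = [LEXICON.get(w, (w, "UNK")) for w in sentence.lower().split()]
    out = []
    i = 0
    while i < len(tagged):
        # Illustrative rule: ADJ NOUN in English becomes NOUN ADJ in Spanish.
        if i + 1 < len(tagged) and tagged[i][1] == "ADJ" and tagged[i + 1][1] == "NOUN":
            out.extend([tagged[i + 1][0], tagged[i][0]])
            i += 2
        else:
            out.append(tagged[i][0])
            i += 1
    return " ".join(out)


print(translate_with_rules("The black cat sleeps"))  # el gato negro duerme
print(translate_with_rules("The black dog"))         # el negro dog
```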

Statistical probability based: Once you have a list of nouns and some knowledge of which words generally follow one another, you can combine grammar rules with probability-based context and use that for translation. This works well and is what most companies use.
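A minimal sketch of the "which words generally follow one another" idea: a bigram model scores candidate translations (for instance, those produced by the grammar-and-substitution step) and picks the most probable word sequence. The probabilities in `BIGRAMS` are invented for illustration, as if estimated from a Spanish text corpus, and the `UNSEEN` floor is a crude stand-in for real smoothing. Full statistical systems also weigh how well each candidate matches the source sentence; this shows only the target-language scoring part.

```python
import math

# Hypothetical bigram probabilities P(next word | previous word) for Spanish.
# "<s>" marks the start of a sentence. All numbers are invented for illustration.
BIGRAMS = {
    ("<s>", "el"): 0.2,
    ("el", "gato"): 0.05,
    ("gato", "negro"): 0.1,
    ("el", "negro"): 0.01,
    ("negro", "gato"): 0.001,
}
UNSEEN = 1e-6  # crude floor for unseen word pairs; real systems use proper smoothing


def log_score(words):
    """Sum of log bigram probabilities over the sentence (higher is more likely)."""
    tokens = ["<s>"] + words
    return sum(math.log(BIGRAMS.get((a, b), UNSEEN)) for a, b in zip(tokens, tokens[1:]))


def best_candidate(candidates):
    """Return the candidate word sequence with the highest bigram score."""
    return max(candidates, key=log_score)


candidates = [["el", "negro", "gato"], ["el", "gato", "negro"]]
print(" ".join(best_candidate(candidates)))  # el gato negro
```

Log probabilities are summed rather than multiplying raw probabilities, which avoids numeric underflow on longer sentences.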

Comparison of translated work: This is where Google shines. It has a large collection of documents that have already been translated, which serve as a good basis for comparison. Combine that with some basic grammar rules and you have a reasonably accurate engine. This requires servers that look up large databases of written work, but that is exactly what Google has available.
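A simplified sketch of translating by comparison with existing translations, assuming a hypothetical phrase table harvested from documents that already exist in both English and Spanish. The translator greedily matches the longest phrase it has seen before and falls back to passing a word through unchanged. This is an illustration of the general idea only, not Google's actual method; `PHRASE_TABLE` and `translate_by_example` are made-up names.

```python
# Hypothetical phrase table, as if extracted from documents already translated
# from English into Spanish. Keys are English phrases stored as word tuples.
PHRASE_TABLE = {
    ("the", "black", "cat"): "el gato negro",
    ("the", "cat"): "el gato",
    ("sleeps",): "duerme",
    ("on", "the", "sofa"): "en el sofá",
}


def translate_by_example(sentence: str, max_len: int = 4) -> str:
    words = sentence.lower().split()
    out = []
    i = 0
    while i < len(words):
        # Try the longest phrase seen in the translated corpus first.
        for n in range(min(max_len, len(words) - i), 0, -1):
            phrase = tuple(words[i:i + n])
            if phrase in PHRASE_TABLE:
                out.append(PHRASE_TABLE[phrase])
                i += n
                break
        else:
            # No match at any length: pass the word through untranslated.
            out.append(words[i])
            i += 1
    return " ".join(out)


print(translate_by_example("The black cat sleeps on the sofa"))
# el gato negro duerme en el sofá
```

The larger the collection of translated documents, the more phrases the table covers, which is why access to a huge corpus (and the servers to search it) matters so much for this approach.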

More information on machine translation is available from Machine Translation in the Americas and Wikipedia.